Resilient MicroServices With Circuit Breaker Pattern

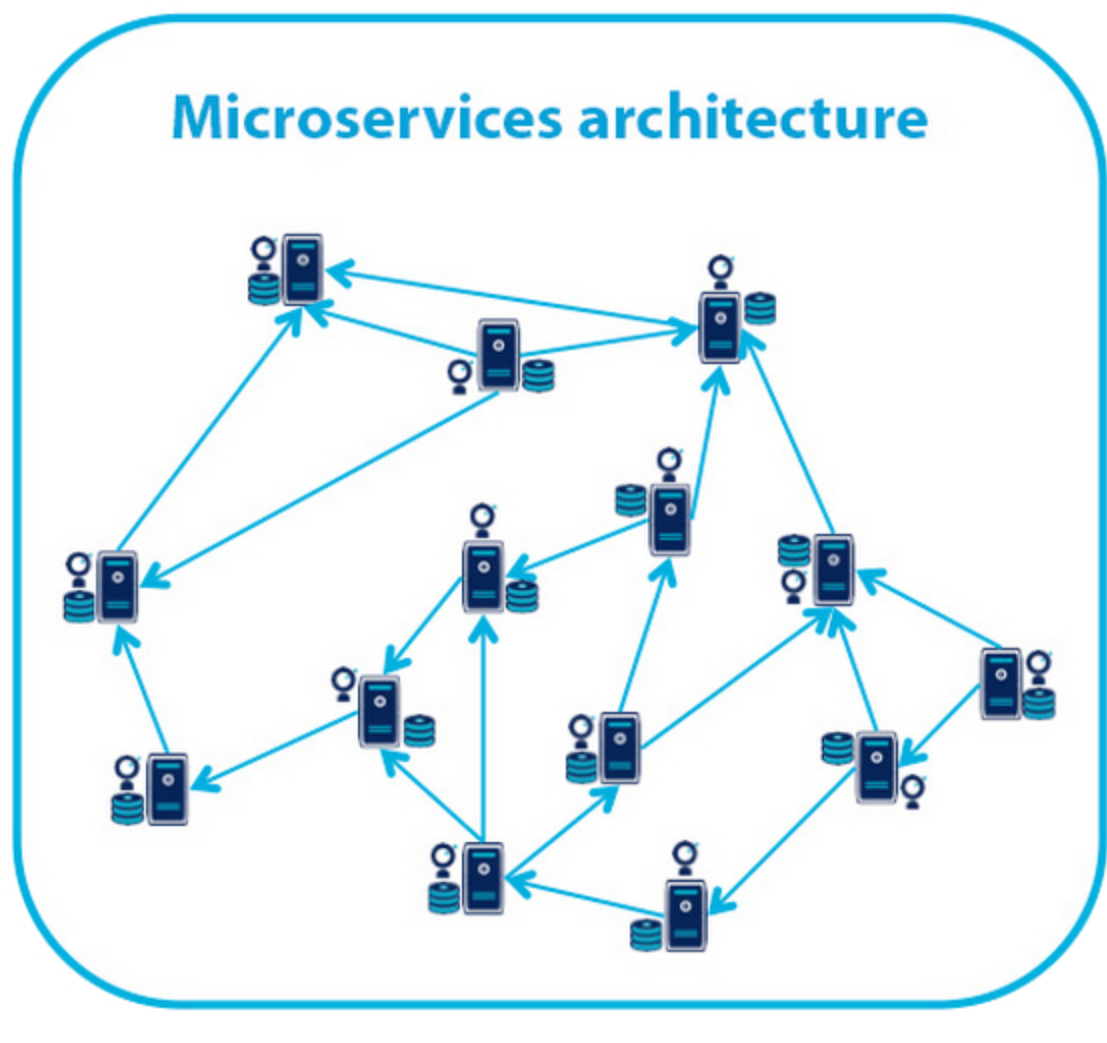

Microservice architecture brings a lot of flexibility to application development and deployment, but it introduces a new level of complexity when it comes to handling transactions and inter-service communication.

The whole microservices architecture is like a huge web of services, each talking to one or more of the other services.

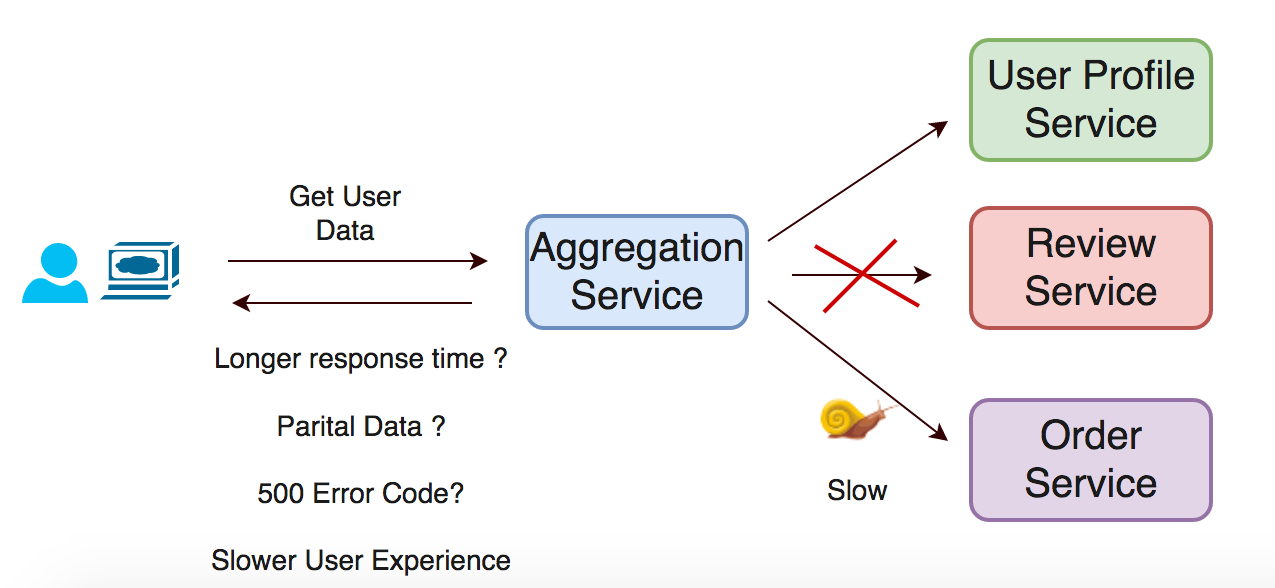

Each service has its own performance and reliability targets, expressed as a Service Level Agreement (SLA), which in turn may be affected by the performance of the services it depends on.

A front-facing service can be held hostage if one or more of the services it depends on are unable to meet their SLAs. This in turn impacts the SLA of the front-facing service and can result in a bad experience for its users.

It’s not just one API call or one user that we are talking about; it could be most, if not all, of the API calls coming to the service for different users.

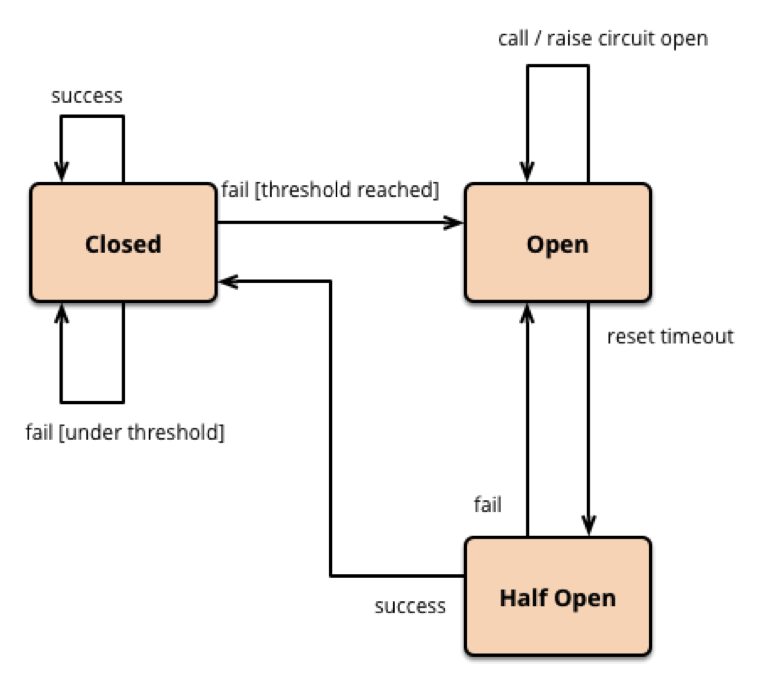

This is where the ‘Circuit Breaker’ design pattern comes into the picture. The design pattern is similar to how an electrical circuit breaker works. The idea is to trip the circuit when something bad happens, thus preventing the issue from escalating and turning into a disaster.

The diagram below illustrates the design pattern as explained by Martin Fowler in ‘Application Architecture’.

Key Parameters Affecting API SLAs in a MicroService Architecture:

- Connection timeouts: occur when the client is unable to connect to a service within a given timeframe. This may be caused by a slow or unresponsive service.

- Read timeouts: occur when the client is unable to read the results from the service within a given timeframe. The service may be doing a lot of computation or preparing the data to be returned inefficiently.

- Exceptions caused by:

  - Bad data sent to the service by the client.

  - The service being down.

  - An issue on the service.

  - An issue on the client while parsing the response, for example when the response changes on the service and the client is unaware of it.
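To make the difference between the two timeouts concrete, here is a minimal sketch using only Python's standard `socket` module. The function name `probe`, its return labels, and the `ping` payload are hypothetical and chosen for illustration; a real HTTP client would expose the same two settings under its own names.

```python
import socket


def probe(host, port, connect_timeout=3.0, read_timeout=3.0):
    """Classify one call as 'ok', 'connect_timeout', 'read_timeout' or 'connection_error'."""
    try:
        # Connect timeout: how long we wait for the connection to be established.
        sock = socket.create_connection((host, port), timeout=connect_timeout)
    except socket.timeout:
        return "connect_timeout"
    except OSError:
        # For example, connection refused because the service is down.
        return "connection_error"

    with sock:
        # Read timeout: how long we wait for the service to send something back.
        sock.settimeout(read_timeout)
        try:
            sock.sendall(b"ping\n")
            data = sock.recv(1024)
        except socket.timeout:
            return "read_timeout"
        except OSError:
            return "connection_error"

    # An empty read means the peer closed the connection without answering.
    return "ok" if data else "connection_error"
```

The connect timeout only covers establishing the connection. A service that accepts the connection but is slow to answer is caught by the read timeout instead.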

Building Resilient Services

Netflix has built Hystrix, a library which implements the circuit breaker pattern. This library will help us build resilient services.

Key advantages of Circuit Breaker Pattern:

- Fail fast and rapid recovery.

- Prevent cascading failure.

- Fallback and gracefully degrade when possible.

Hystrix library:

- Implements the circuit breaker pattern.

- Provides near real-time monitoring via the Hystrix stream and the Hystrix dashboard.

- Generates monitoring events which can be published to external systems like Graphite.

Use Case: NewsFeed Aggregation Service

Overview:

The NewsFeed Aggregation service is responsible for delivering the data used to render the ‘Recent Activities’ section of the page for a user. Recent activities is similar to the Facebook timeline: it contains any updates, notifications, and activities that happened between the user’s last visit to the site and now.

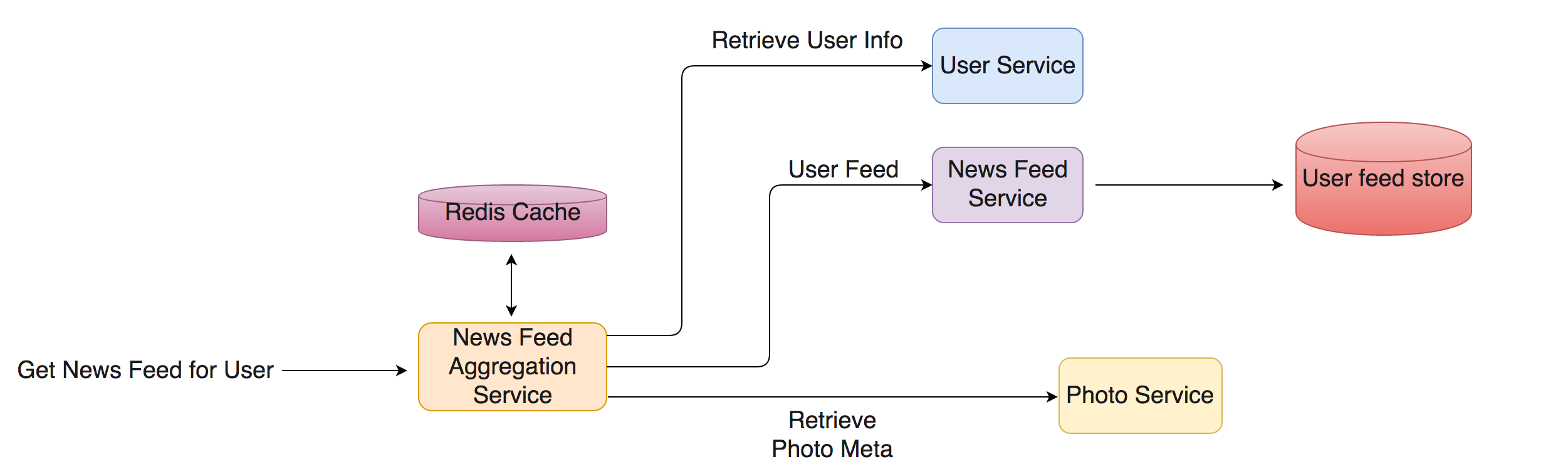

The aggregation service depends on 3 other microservices. The News Feed service is the primary source of the feed, whose data is further enriched with information retrieved from user-service and photo-service.

The feed is shown on the landing page of the application and acts as a driver for user engagement on the site. Hence, it is of utmost importance that the operation to fetch the required news feed data is fast and reliable.

We want the user neither to sit through a long ‘loading…’ animation nor to see no data at all, so the backend services need to be highly resilient.

Application Flow Diagram: Before Integrating With Hystrix

Here’s a flow diagram indicating how the user feed is fetched and enriched. The Newsfeed Aggregation service has three clients, each talking to different services (news feed, user and photo service).

Each client has a certain ‘Read Time Out’ and ‘Connect Time Out’ associated with it. Let’s assume these are configured to be 3 seconds each. So, if a dependent service doesn’t respond within 3 seconds, or if the calling service is unable to establish a connection within 3 seconds, the client throws a read or connect timeout exception.

In the happy path scenario, everything works as usual and the dependent services return data within a few milliseconds. The application is able to retrieve the feed and return it to the user.

However, if one of the dependent services goes down, every user will see an increase of at least 3 seconds in the response time, only to receive partial data or no data at all. The page will be slower and the user experience will suffer.
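The flow without a circuit breaker can be sketched roughly as follows. This is an illustration under assumptions, not the actual service code: the function `aggregate_feed`, the three client callables, and the field names `author_id`, `author` and `photo` are all hypothetical, and each client is assumed to raise `TimeoutError` when its read or connect timeout expires.

```python
def aggregate_feed(user_id, feed_client, user_client, photo_client):
    """Fetch the feed and enrich each item, degrading to partial or no data on timeouts."""
    try:
        items = feed_client(user_id)
    except TimeoutError:
        # The primary source is unavailable: nothing to show at all.
        return []

    enriched = []
    for item in items:
        entry = dict(item)
        try:
            entry["author"] = user_client(item["author_id"])
        except TimeoutError:
            entry["author"] = None  # partial data: author details missing
        try:
            entry["photo"] = photo_client(item["author_id"])
        except TimeoutError:
            entry["photo"] = None  # partial data: photo missing
        enriched.append(entry)
    return enriched
```

The weakness of this version is that every failed call still waits out the client's full timeout before the `except` branch runs, and it does so again on every request for as long as the dependency stays down.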

Application Flow Diagram: After Integrating With Hystrix

Using the Hystrix library, we can have the service execute a fallback operation when a certain threshold of failure rate for talking to an external service is reached. This is essentially a state where the circuit is considered open. As soon as the circuit is open, the service only executes the fallback operation and avoids reaching out to the external service for a certain cooldown period, thus saving the expensive 3 second timeout on each API call to the external service.

After the cooldown period, Hystrix allows one request to go out to the external service. If it succeeds, the circuit is closed again and all subsequent calls reach out to the external service until the failure threshold is reached the next time.
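The open, cooldown, and trial-request behaviour described above can be sketched in a few lines. This is a library-independent illustration in Python, not Hystrix's API or implementation. The class name, the parameter names, the fixed-size window of recent outcomes, and the injectable `clock` are all assumptions made for the example, and the sketch is single-threaded.

```python
import time
from collections import deque


class CircuitBreaker:
    """Minimal, single-threaded sketch of the circuit breaker pattern."""

    def __init__(self, failure_rate_threshold=0.5, window=10, min_calls=4,
                 cooldown_seconds=5.0, clock=time.monotonic):
        self.failure_rate_threshold = failure_rate_threshold
        self.min_calls = min_calls            # don't judge on too few samples
        self.cooldown_seconds = cooldown_seconds
        self.clock = clock
        self.outcomes = deque(maxlen=window)  # recent calls, True means failure
        self.opened_at = None                 # None means the circuit is closed

    def call(self, operation, fallback):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.cooldown_seconds:
                # Open: fail fast without touching the external service.
                return fallback()
            # Cooldown elapsed: let this one request through as a trial.
            try:
                result = operation()
            except Exception:
                self.opened_at = self.clock()  # trial failed, restart the cooldown
                return fallback()
            self.opened_at = None              # trial succeeded, close the circuit
            self.outcomes.clear()
            return result

        # Closed: call the external service and record the outcome.
        try:
            result = operation()
        except Exception:
            self._record(failed=True)
            return fallback()
        self._record(failed=False)
        return result

    def _record(self, failed):
        self.outcomes.append(failed)
        if len(self.outcomes) >= self.min_calls:
            failure_rate = sum(self.outcomes) / len(self.outcomes)
            if failure_rate >= self.failure_rate_threshold:
                self.opened_at = self.clock()  # trip the circuit
```

A caller wraps each remote call, for example `breaker.call(lambda: photo_client(author_id), lambda: None)`, where `photo_client` is a hypothetical client function. While the circuit is open the fallback returns immediately, so users no longer pay the timeout for a dependency that is known to be down.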